Introduction

I’ve spent the last three years or so examining and analyzing Talent Acquisition Technology in order to inform and advise Directors and Heads of Talent Acquisition at some well-known corporations, as well as leaders of staffing firms around the United States. Technology focused on sourcing, sorting, selecting and ultimately recruiting talent has gone through a couple of hype cycles in that short time, and it’s fair to say that some talent executives have struggled to keep up with the pace of change. The goal of this article is to dive into another one of those technological advancements, hopefully before the hype cycle even begins, and examine some ways this technology could impact the world, and recruitment specifically.

Definition: Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. It is the third-generation language prediction model in the GPT-n series created by OpenAI, a San Francisco-based artificial intelligence research laboratory.

GPT-3 is OpenAI’s latest language model. It has been hailed as the most powerful language model ever built, with approximately 175 billion parameters. To put that into context, the second-largest language model is Microsoft’s Turing NLG, which has roughly 17 billion parameters. Oh, and parameters are the internal variables a machine learning model learns from its training data and then uses to estimate an output from any given input. As a rule of thumb, more parameters let a model capture more complex patterns, provided it has enough data to learn them from. To give this even more context, when I was studying advanced econometrics in my undergraduate degree, we created models with up to 5 parameters. More than 5 and either the models or our understanding of the output simply broke.
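To make “parameters” concrete, here is a minimal Python sketch of the kind of model an econometrics class might build: a straight line with just two parameters, an intercept and a slope, estimated from data by ordinary least squares. GPT-3 is vastly larger and is not trained this way; the only point is that parameters are the numbers a model learns from data and then uses to turn an input into an output. The sample data is made up.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Estimate the two parameters (intercept a, slope b) of y = a + b*x by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how much y moves, on average, when x moves by one unit.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    b = covariance / variance
    # Intercept: chosen so the fitted line passes through the point of means.
    a = mean_y - b * mean_x
    return a, b


def predict(a: float, b: float, x: float) -> float:
    """Use the learned parameters to turn a new input into an output."""
    return a + b * x


# Made-up data that happens to follow y = 2 + 3x exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [5.0, 8.0, 11.0, 14.0]
a, b = fit_line(xs, ys)
print(a, b)               # 2.0 3.0
print(predict(a, b, 10))  # 32.0
```

Two parameters are easy to inspect by eye; at 175 billion, nobody can, which is part of why models like GPT-3 feel so different.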

GPT-3 is at the cutting edge of AI, and yet it is deceptively simple. In essence, it is an autocomplete program, similar to the autocomplete you see when you start typing into Google’s search bar, or when you reach the end of an email and your closing phrase is filled in automatically.
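The autocomplete idea can be illustrated with a deliberately tiny Python sketch: a bigram model that counts which word tends to follow which in a few sample sentences, then extends a prompt one word at a time. GPT-3 is autoregressive in the same sense (each new token is predicted from the text so far), but it uses a transformer neural network conditioned on the whole context rather than simple word-pair counts. The sample sentences are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def autocomplete(model: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Extend the prompt one word at a time, always picking the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = model.get(words[-1]) if words else None
        if not candidates:
            break  # never seen this word before, so there is nothing to predict
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# A tiny, invented "training set" of email sign-offs.
CORPUS = [
    "thanks for your time",
    "thanks for your help",
    "thanks for your time and consideration",
    "looking forward to hearing from you",
]
model = train_bigrams(CORPUS)
print(autocomplete(model, "Thanks"))  # thanks for your time and consideration
```

Each predicted word is fed back in as input for the next prediction; that feedback loop is what “autoregressive” means.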

Doesn’t really sound too cutting-edge, does it? What makes it cutting-edge is the combination of a very simple input with the sheer depth and complexity of the model, which together can produce some amazing results.

Background On AI

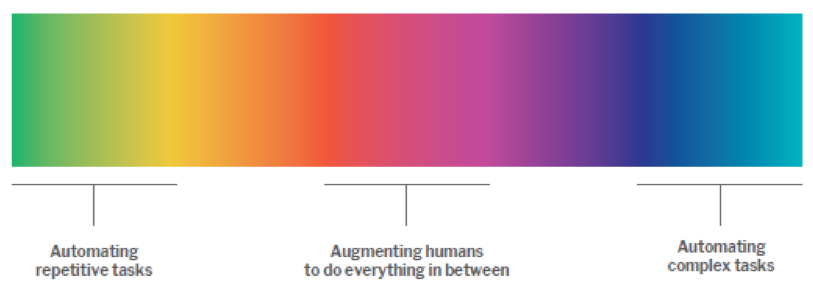

Let’s take a quick step backwards and look at Artificial Intelligence as a whole. I like to think of AI as a spectrum, moving from Narrow Artificial Intelligence on the far left, to General Artificial Intelligence in the middle and Super Artificial Intelligence on the far right of the spectrum.

Now, I don’t want to get into the weeds too much, but in essence Narrow AI is what we generally mean when we talk about practical applications of Artificial Intelligence today: task augmentation. Take a repetitive task with well-known variables and train the model on that task. There is a certain class of chatbot that fits this description really well: the ones that ask you, the user, a question and then make you choose from a fixed set of answers. For example: “In the future, will you require sponsorship to work in the US?” Choose “Yes” or “No”. Depending on your choice, the bot moves you on to the next pre-determined step in the process.

That is an example of Narrow AI, otherwise known as Weak AI. Bots like this are essentially logic gates that information must flow through. Pretty much all practical uses of AI right now fall into this category, whether it’s a chatbot, a movie recommendation engine or Google classifying images, but more on that later.
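The “logic gate” behaviour of that kind of chatbot can be sketched in a few lines of Python. The flow, step names and follow-up questions below are hypothetical; the point is that every path is written out in advance, so the bot cannot handle an answer it was not given.

```python
from typing import Optional

# A hypothetical screening flow: each step has a question and a fixed set of
# allowed answers, each pointing at the next pre-determined step.
SCREENING_FLOW = {
    "sponsorship": {
        "question": "In the future, will you require sponsorship to work in the US?",
        "answers": {"yes": "visa_status", "no": "start_date"},
    },
    "visa_status": {"question": "What is your current visa status?", "answers": {}},
    "start_date": {"question": "When could you start?", "answers": {}},
}


def next_step(current: str, answer: str) -> Optional[str]:
    """Return the next step for an allowed answer, or None if the answer isn't one of the options."""
    allowed = SCREENING_FLOW[current]["answers"]
    return allowed.get(answer.strip().lower())


step = "sponsorship"
print(SCREENING_FLOW[step]["question"])
print(next_step(step, "Yes"))    # visa_status
print(next_step(step, "maybe"))  # None: the bot can only re-ask the question
```

Nothing here is learned from data; a language model like GPT-3 becomes interesting precisely where answers fall outside such a hand-written table.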

Then we have General Artificial Intelligence, which is closer to what we have been brought up to expect from movies like I, Robot, or, I don’t know, The Matrix I suppose. General AI, otherwise known as Strong AI, refers to computers that exhibit intelligence on par with humans, the idea being that Strong AI could perform any intellectual task that a human can. This type of AI is usually imagined as a sentient being: it would “think” and have some sort of consciousness. Don’t worry, we are still quite a way from that, but it is the next major destination on the artificial intelligence journey.

Lastly, on this spectrum, we have Super Artificial Intelligence. This is a hyper-intelligence that would far exceed puny human minds in pretty much every aspect imaginable. Maybe The Matrix is a better example here. Or the moment Skynet is activated in The Terminator and the machines turn on humans. Either way, while some people might disagree with me, I think it’s a safe bet that we have nothing to fear from Super AI in the foreseeable future.

Explanation of GPT-3

Ok, so now that we have laid the groundwork, let’s turn our attention back to the technology at hand, GPT-3. This is cutting-edge Narrow AI: it uses pattern recognition to infer a whole lot from very little. The language model has been trained on lots and lots of text and, as I mentioned, has 175 billion parameters, stored as the weights of the connections between nodes in GPT-3’s neural network. If you are unfamiliar with neural networks, MIT has a good, if dated, article on them here: http://news.mit.edu/2017/explained-neural-networks-deep-learning-0414.
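To see what “parameters stored as weighted connections” means, this Python sketch shows a single artificial neuron and a helper that counts the parameters (weights plus biases) in one fully connected layer. It is a textbook illustration, not GPT-3’s actual architecture: transformers also contain attention layers, but their parameters are likewise learned weights, and adding them up across every layer is how you arrive at a figure like 175 billion.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: a weighted sum of its inputs plus a bias, squashed by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def dense_layer_parameters(n_inputs: int, n_outputs: int) -> int:
    """Parameters in a fully connected layer: one weight per connection plus one bias per output node."""
    return n_inputs * n_outputs + n_outputs


print(neuron([0.5, -1.0], [0.8, 0.2], 0.1))
# A toy network with 4 inputs, 8 hidden nodes and 1 output:
print(dense_layer_parameters(4, 8) + dense_layer_parameters(8, 1))  # 49
```

Training a network means nudging every one of those weights and biases until the outputs match the training data; the numbers in the example are arbitrary.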

GPT-3 is a text in, text out program. It does need some “programming” for a specific task, either by showing it a few structured examples or by learning from human feedback (i.e., training the model for your specific use case), but after that it is good to go.
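“Programming” GPT-3 with structured examples mostly means writing a prompt that shows the pattern you want and leaves the last answer blank for the model to autocomplete, an approach often called few-shot prompting. The Python sketch below only assembles such a prompt; the Input/Output layout and the job-title examples are my own assumptions, not a format OpenAI requires.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Combine an instruction, worked examples and one new case into a single prompt string."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # The last Output is left blank on purpose: the model is expected to autocomplete it.
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Map each job title to a standard job family.",
    [("Sr. SWE II", "Software Engineer"), ("Talent Partner", "Recruiter")],
    "Data Wizard",
)
print(prompt)
```

Because GPT-3 is an autocomplete engine, the most natural continuation of this text is a job family for “Data Wizard”, which is how a couple of examples can stand in for traditional programming.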

The GPT-3 API is accessed via HTTPS, which means 1. it’s secure, because HTTPS encrypts the call and verifies the server with a trusted TLS (SSL) certificate, and 2. it’s easy to use, because most backend (and frontend and full-stack) developers will already know how to integrate an HTTPS API. We should note that OpenAI has released GPT-3 in beta only, so you can sign up for it, but it is not yet available to the general public while they work out the pricing.
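Because the API is plain HTTPS with a JSON body, a call needs nothing beyond Python’s standard library. This sketch only builds the request and never sends it. The URL, the `model`/`prompt`/`max_tokens` field names and the bearer-token header are assumptions based on OpenAI’s later public completions API, which has changed since the beta; `MODEL_NAME` and the key are placeholders.

```python
import json
import urllib.request

# Assumptions: URL, field names and header follow OpenAI's later public
# completions API, which has changed since the GPT-3 beta. MODEL_NAME is a
# placeholder; check the current documentation before relying on any of this.
API_URL = "https://api.openai.com/v1/completions"
MODEL_NAME = "your-model-name"


def build_completion_request(api_key: str, prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build, but do not send, an HTTPS POST carrying the prompt as JSON."""
    body = json.dumps({"model": MODEL_NAME, "prompt": prompt, "max_tokens": max_tokens})
    return urllib.request.Request(
        API_URL,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the API key identifies your account
        },
        method="POST",
    )


request = build_completion_request("YOUR_API_KEY", "Dear candidate,")
print(request.get_method(), request.full_url)
```

To actually send it, you would pass `request` to `urllib.request.urlopen` and parse the JSON response; the `https://` scheme is what provides the encryption described above.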

Use Cases – General

I want to approach this in two parts: general use cases as they apply to the world at large, and recruiting use cases.

So, tackling the bigger, broader collection first:

Semantic Search – this has been a key differentiator for AI solutions over the last few years.

Keyword search is the most commonly used type of search functionality. Put very simply, the user inputs a word, and the search mechanism loops over the database and displays the objects that contain that keyword. Semantic search, on the other hand, uses the contextual meaning of the words rather than their exact letters. Someone can type a question or a few words into a search bar, and instead of filtering the database or document for exact matches, the search mechanism finds terms and entries that are meaningfully related to the search term.
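The difference between the two can be shown side by side in a short Python sketch. Semantic search is usually implemented by turning text into vectors (“embeddings”) and comparing them; here the three-number vectors are hand-made and hypothetical, standing in for the much longer vectors a language model would produce, and the query vector for “machine learning” is likewise assumed.

```python
import math

# Hypothetical, hand-made "embeddings": each number scores how strongly a
# profile relates to (machine learning, recruiting, finance). A real system
# would obtain much longer vectors from a language model.
PROFILES = {
    "Built recommendation models in Python": [0.9, 0.0, 0.1],
    "Fine-tuned GPT-3 for customer support": [0.8, 0.1, 0.0],
    "Machine learning enthusiast, career in payroll": [0.2, 0.0, 0.9],
    "High-volume recruiter for retail roles": [0.0, 0.9, 0.1],
}


def keyword_search(query: str, docs: dict[str, list[float]]) -> list[str]:
    """Keyword search: keep documents whose text contains every query word (case-insensitive substring match)."""
    words = query.lower().split()
    return [doc for doc in docs if all(word in doc.lower() for word in words)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Near 1.0 means the vectors point the same way (similar meaning); near 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def semantic_search(query_vector: list[float], docs: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Semantic search: rank documents by how close their meaning vector is to the query's."""
    ranked = sorted(docs, key=lambda doc: cosine_similarity(query_vector, docs[doc]), reverse=True)
    return ranked[:top_k]


print(keyword_search("machine learning", PROFILES))
# ['Machine learning enthusiast, career in payroll']
print(semantic_search([1.0, 0.0, 0.0], PROFILES))  # assumed vector for "machine learning"
# ['Built recommendation models in Python', 'Fine-tuned GPT-3 for customer support']
```

Keyword search returns the only profile that literally says “machine learning”, while semantic search surfaces the two profiles that actually describe machine learning work without using those words.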

- Chat: The implications for chat are pretty mind-blowing. After spending the last 30 minutes playing AI Dungeon, a text-based adventure game built using GPT-3, I can safely say that this is a major step in the right direction. It is not hard to see this technology becoming standard in sales, marketing, recruiting, human resources and customer service chat software, so that users can have a much more fluid conversation with the bot. The remaining challenge will be training the bot on the specific answers needed for that industry or use case.

- Productivity Tools: The major use case here is text to code. Instead of having to work through the syntax of JSX or PHP or whatever language you are using, you can just type what you want, and GPT-3 will translate what you mean into code. The example given on the OpenAI website is of a user typing “what is the date” into the terminal and getting the right response, then typing “can you print that” and then “can you clone the openai gym repo”. I found a much more interesting example from Sharif Shameem, who gave GPT-3 just two samples and created a program that generates code from his descriptions. For example, he created the Google page layout by typing “the Google logo, a search box, and two lightgray buttons that say ‘Search Google’ and ‘I’m Feeling Lucky’”. And just like that, it spits out the code to create that layout. Once again, not exactly rocket science, but a significant step forward, and for a nerd like me, really cool!

Use Cases – Recruiting

Enough of the general use cases; let’s get down to business with how this can be applied to recruiting:

- Emails! So much of our time as Talent Acquisition professionals is taken up with emails. Especially for recruiters in relatively high-volume roles, the number of similar-looking yet slightly different emails that get sent is crazy. You can spend the time building out templates and structured drip email campaigns, and many do with great results (Joss from Visage tells me a good cold email results in 20–30% more responses on their platform). But even then, those emails always feel templated. With GPT-3, it may be possible to train the program on a sample of your previous variations so that, once you type the first few words, it autocompletes the rest of the email. The generated email would use words and phrases that capture your tone and sound authentic without completely copying your samples.

- The same goes for job descriptions or job posts. Who likes writing job posts? It’s fair to say that at most companies I have had the pleasure of supporting, the status quo is to take an old job post from SharePoint or wherever, dust it off a little, update the dates and title, add a couple of sentences and post it. Not exactly best practice, but probably close to the mark for the majority of job posts out there. GPT-3 could potentially fix that. Once it is trained on a couple of job posts, the model should be able to create a new job post with relatively little input from the poster. The specifics of the job will still need to be updated, and as always: garbage in, garbage out.

- Generate Boolean or X-ray searches on demand. Instead of trying to string together a whole heap of phrases, keywords and symbols to create the world’s most innovative Boolean search and find that purple squirrel, recruiters will be able to type what they are looking for in natural language and have GPT-3 translate it. Maybe that way they can get back to building relationships rather than query strings!

- The dreaded sorting through applications. There’s a common trick among certain candidates applying for jobs they may not be fully qualified for: fill the bottom of your resume with all the critical keywords for that role in tiny white font. That way, when the recruiter searches for someone with management consulting and machine learning experience, your resume comes up at the top of the list, even if you’ve never worked in management consulting and have no machine learning experience. Candidates hope that once their resume is in front of a human, they’ll get a chance to prove themselves. While that might be the case, the truth is that if you are willing to deceive like this, you might not be the best candidate for that position anyway. So, how can GPT-3 help us get around this while still bringing the right candidates to the fore? Semantic search. With GPT-3, recruiters should be able to type in “machine learning” and have candidates with experience in OpenAI, GPT-3, Python or any other tangential skills and experience highlighted, instead of just the candidates with the words “machine learning” on their resume! Think about how useful this could be, especially for organizations that have invested a lot of time and capital into creating and maintaining large candidate databases.
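For the Boolean item above, it helps to see the kind of output a recruiter would want GPT-3 to produce. This Python sketch assembles a conventional Boolean string and a Google-style X-ray string from plain lists of titles and skills; in a GPT-3-powered tool, those lists would be pulled out of a natural-language request, whereas here they are typed in by hand. The function names are hypothetical, and operator support (NOT, parentheses) varies between sourcing tools and search engines, so treat the exact syntax as an assumption.

```python
from collections.abc import Sequence


def quote(term: str) -> str:
    """Wrap multi-word phrases in quotes so they are searched as exact phrases."""
    return f'"{term}"' if " " in term else term


def or_group(terms: Sequence[str]) -> str:
    """Join alternative terms with OR inside parentheses."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_boolean_search(titles: Sequence[str], skills: Sequence[str], exclude: Sequence[str] = ()) -> str:
    """Boolean string for tools that support AND / OR / NOT (syntax varies by tool)."""
    query = f"{or_group(titles)} AND {or_group(skills)}"
    return query + "".join(f" NOT {quote(term)}" for term in exclude)


def build_xray_search(site: str, titles: Sequence[str], skills: Sequence[str]) -> str:
    """Google-style X-ray string: restrict results to one site; spaces act as an implicit AND."""
    return f"site:{site} {or_group(titles)} {or_group(skills)}"


print(build_boolean_search(["data scientist", "ML engineer"], ["python", "tensorflow"], ["intern"]))
# ("data scientist" OR "ML engineer") AND (python OR tensorflow) NOT intern
print(build_xray_search("linkedin.com/in", ["data scientist"], ["python", "sql"]))
# site:linkedin.com/in ("data scientist") (python OR sql)
```

The string-building is the easy part; the value GPT-3 could add is in turning “a data scientist who knows Python, not an intern” into those lists in the first place.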

Future of Recruiting

So, this is where the rubber hits the road: how does GPT-3 actually make its way into the hands of the recruiter? Well, through recruitment technology vendors, who thrive when they add more value for their customers. Each vendor will use technology like GPT-3 differently, to power a different part of their solution, and with varying degrees of success. That is what makes this so fun: some will have wild success with this technology, while others will unfortunately fail to derive any value from it.

This is just the start for GPT-3. I’m sure it will get more and more airtime as OpenAI releases it to the public, and we’ll hear all about the wonderful things that can be done with it. One word of caution, though: there are pundits who make a living hyping these things up and pundits who make a living tearing them down. Focus on the practical implications and concrete use cases, and you won’t go too far wrong.

Summary

It is important for Talent Acquisition leaders to understand the fundamentals of this technology and how it could be applied, because it shows us the direction in which technology is advancing. Those who understand both hype cycles and adoption cycles should see this for what it is: the next step in a long journey of advancement. This is not a silver bullet that will solve all recruiting problems, but it is indicative of where things are going and what skills we need to be building. The recruiting leader of the future is intellectually curious, always willing to try new tools and technologies, and quick to adapt as the world of work evolves!